Lab 3 - Neural and neurosymbolic search#

Note: I am running this on my personal laptop, which has an RTX3050 8GBs (I know, I know, it’s an old laptop). In practice, I can only do 0.5B models. I also tried a larger model on the server, as detailed below. Eventually I want to add a section on neurosymbolic search, using the LLM as a proposal function.

Imports#

We are using the following libraries:

Huggingface’s Transformers

Unsloth: integrates with TRL and Transformers, can fine-tune LLMs using a variety of methods much faster than other libraries.

vllm: Starts a server to do LLM stuff with, makes inference faster.

trl: Reinforcement Learning fine-tuning

import re

import itertools

import math

import time

import random

from collections import Counter

from pprint import pprint

import json

import numpy as np

import pandas as pd

from numpy.random import choice, randint

from IPython.display import HTML, display, clear_output

import matplotlib.pyplot as plt

import ipywidgets as widgets

# Utilities for plotting

from symbolic_utilities import progress, compute_global_limits_smc, plot_mh_trace_upto, plot_state_2d

# MHMC sampler

from symbolic_utilities import propose_tree, get_coordinates, \

mh_sampler, smc_sampler, define_bs_DSL, define_lt_DSL, enumerate_full_sentences

from neural_utilities import extract_xml_answer, extract_xml_reasoning, produce_tasks, get_data

from neural_utilities import print_func, lt_correctness_reward_func, \

xmlcount_reward_func, soft_format_reward_func, strict_format_reward_func, cfg_reward_func, lt_correctness_reward_func, \

direct_cfg_reward_func, direct_lt_correctness_reward_func, direct_conciseness_reward_func

from symbolic_utilities import \

ltgrammar, lt_nonterminals, lt_terminals, lt_eval_dict, \

bsgrammar, bs_nonterminals, bs_terminals, bs_eval_dict

# NOTE: PatchFastRL needs to run **before** the imports below

from unsloth import FastLanguageModel, is_bfloat16_supported, PatchFastRL

PatchFastRL("GRPO", FastLanguageModel)

import torch, gc

from torch import tensor

from datasets import load_dataset, Dataset, DatasetDict

from transformers import EarlyStoppingCallback, TextStreamer, TrainingArguments

from peft import AutoPeftModelForCausalLM

from trl import SFTTrainer, GRPOConfig, GRPOTrainer

from unsloth.chat_templates import get_chat_template

from vllm import SamplingParams

from dotenv import load_dotenv, find_dotenv

import os

from openai import OpenAI

🦥 Unsloth: Will patch your computer to enable 2x faster free finetuning.

🦥 Unsloth Zoo will now patch everything to make training faster!

INFO 03-14 09:08:39 __init__.py:190] Automatically detected platform cuda.

print(torch.cuda.is_available())

print(torch.cuda.device_count())

print(torch.cuda.current_device())

print(torch.cuda.get_device_name(0))

True

1

0

NVIDIA GeForce RTX 3050 Ti Laptop GPU

Using supervised and RL finetuning to improve inductive synthesis#

Some random things we’re gonna need below:

def check_accuracy(x, indices, functions):

function = functions[indices]

accuracy = 0

for inp, out in x['examples']:

if out == function(inp):

accuracy += 1/len(x['examples'])

return {'accuracy': accuracy}

max_seq_length = 1024 # Can increase for longer reasoning traces

lora_rank = 64 # Larger rank = smarter, but slower

lt_system_prompt = ""

Let’s start by defining some data that we’ll use to train the model:

# get all sentences up to depth 5

sentences_pool = []

for i, sent in enumerate(enumerate_full_sentences('T', ltgrammar, max_depth=6)):

sentences_pool.append(sent)

if i==500000:

break

data = get_data(

ltgrammar,

lt_system_prompt,

eval_dict=lt_eval_dict,

n_tasks=100000,

sentences_pool=sentences_pool

)

Add the “completion” column to data which contains the string that the model is supposed to produce for the corresponding examples, containing a format that makes the model happy (we can later use the tokenizer to turn it into a properly formatted input for the model):

data = data.map(lambda x: {

'completion': [{'content': x['sentence'], 'role': 'assistant'}],

})

# 90% train, 10% test + validation

train_testvalid = data.train_test_split(train_size=2**16, test_size=2* 2**7)

# Split the 10% test + valid in half test, half valid

test_valid = train_testvalid['test'].train_test_split(test_size=0.5)

# gather everyone if you want to have a single DatasetDict

data = DatasetDict({

'train': train_testvalid['train'],

'test': test_valid['test'],

'valid': test_valid['train']})

data

DatasetDict({

train: Dataset({

features: ['sentence', 'examples', 'task', 'prompt', 'completion'],

num_rows: 65536

})

test: Dataset({

features: ['sentence', 'examples', 'task', 'prompt', 'completion'],

num_rows: 128

})

valid: Dataset({

features: ['sentence', 'examples', 'task', 'prompt', 'completion'],

num_rows: 128

})

})

Later we can get an impressionistic idea of how the model is behaving by getting a datapoint:

d = data['test'][1]['prompt']

d

[{'content': '', 'role': 'system'},

{'content': '-[9, 0, 6, 0, 5] -> [13, 4, 10, 4, 9]\n-[7, 0, 6, 6] -> [11, 4, 10, 10]\n-[5, 3, 2, 4, 6] -> [9, 7, 6, 8, 10]',

'role': 'user'}]

Some terminal are more represented than others - this is just due to the way the grammar is structured. You can imagine it as defining a distribution over tasks in our environment:

c = Counter()

for s in data['train']['sentence']:

c.update({k:s.count(k) for k in lt_terminals})

c

Counter({'(': 619144,

')': 619144,

',': 296764,

'even': 164059,

'gt': 140866,

'and_': 120459,

'or_': 118954,

'filter_': 65512,

'compose': 57351,

'map_': 40899,

'plus': 40887,

'1': 36650,

'4': 36519,

'2': 36448,

'3': 36245,

'5': 35907,

'not_': 34200,

'reverse': 8328,

'sort': 8144,

'minus': 7,

'times': 5,

'truncate': 4})

Let’s check that the test data is not out of distribution!

c = Counter()

for s in data['test']['sentence']:

c.update({k:s.count(k) for k in lt_terminals})

c

Counter({'(': 1240,

')': 1240,

',': 583,

'even': 310,

'gt': 284,

'or_': 234,

'and_': 232,

'filter_': 128,

'compose': 117,

'map_': 90,

'plus': 90,

'1': 84,

'4': 78,

'2': 77,

'5': 70,

'3': 65,

'not_': 65,

'sort': 15,

'reverse': 12,

'truncate': 0,

'minus': 0,

'times': 0})

This is what our data looks like (‘sentence’ is the expression that produced the datapoint, which is not necessarily the best one!):

data['test'].to_pandas().head()

| sentence | examples | task | prompt | completion | |

|---|---|---|---|---|---|

| 0 | compose(map_(plus(5)),filter_(and_(or_(gt(2),o... | [[[7, 7, 3, 0], [12, 12, 8, 5]], [[6, 7], [11,... | -[7, 7, 3, 0] -> [12, 12, 8, 5]\n-[6, 7] -> [1... | [{'content': '', 'role': 'system'}, {'content'... | [{'content': 'compose(map_(plus(5)),filter_(an... |

| 1 | compose(map_(plus(4)),filter_(or_(or_(gt(2),ev... | [[[9, 0, 6, 0, 5], [13, 4, 10, 4, 9]], [[7, 0,... | -[9, 0, 6, 0, 5] -> [13, 4, 10, 4, 9]\n-[7, 0,... | [{'content': '', 'role': 'system'}, {'content'... | [{'content': 'compose(map_(plus(4)),filter_(or... |

| 2 | compose(map_(plus(1)),filter_(or_(even,or_(eve... | [[[1, 5, 2, 4, 8], [2, 6, 3, 5, 9]], [[7, 3, 4... | -[1, 5, 2, 4, 8] -> [2, 6, 3, 5, 9]\n-[7, 3, 4... | [{'content': '', 'role': 'system'}, {'content'... | [{'content': 'compose(map_(plus(1)),filter_(or... |

| 3 | compose(map_(plus(1)),filter_(and_(and_(gt(5),... | [[[8, 6], [9, 7]], [[3, 5, 0], []], [[8, 5], [... | -[8, 6] -> [9, 7]\n-[3, 5, 0] -> []\n-[8, 5] -... | [{'content': '', 'role': 'system'}, {'content'... | [{'content': 'compose(map_(plus(1)),filter_(an... |

| 4 | compose(map_(plus(1)),filter_(and_(or_(even,gt... | [[[9, 2, 5, 4], [10, 6]], [[0, 3, 2, 5], [4, 6... | -[9, 2, 5, 4] -> [10, 6]\n-[0, 3, 2, 5] -> [4,... | [{'content': '', 'role': 'system'}, {'content'... | [{'content': 'compose(map_(plus(1)),filter_(an... |

** Run only until here later for GRPO **

Now we can get a base model. I have chosen Qwen2.5-0.5B-Instruct, because I have an old laptop (if any billionare is reading this, by all means feel free to donate a fancy one):

model, tokenizer = FastLanguageModel.from_pretrained(

model_name="Qwen/Qwen2.5-0.5B-Instruct",

max_seq_length=max_seq_length,

# False for LoRA 16bit

load_in_4bit=True,

# Enable vLLM fast inference

fast_inference=True,

max_lora_rank=lora_rank,

# Reduce if out of memory

gpu_memory_utilization=0.5,

)

# get the model with the lora adapters on top

model = FastLanguageModel.get_peft_model(

model,

# Choose any number > 0 ! Suggested 8, 16, 32, 64, 128

r=lora_rank,

# Which parts of the model are we gonna train?

target_modules=[

"q_proj",

"k_proj",

"v_proj",

"o_proj",

"gate_proj",

"up_proj",

"down_proj",

],

lora_alpha=lora_rank,

# Enable long context finetuning

use_gradient_checkpointing = "unsloth",

random_state=3407,

)

==((====))== Unsloth 2025.2.12: Fast Qwen2 patching. Transformers: 4.49.0.

\\ /| GPU: NVIDIA GeForce RTX 3050 Ti Laptop GPU. Max memory: 4.0 GB. Platform: Linux.

O^O/ \_/ \ Torch: 2.5.1+cu124. CUDA: 8.6. CUDA Toolkit: 12.4. Triton: 3.1.0

\ / Bfloat16 = TRUE. FA [Xformers = 0.0.28.post3. FA2 = False]

"-____-" Free Apache license: http://github.com/unslothai/unsloth

Unsloth: Fast downloading is enabled - ignore downloading bars which are red colored!

Unsloth: vLLM loading unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit with actual GPU utilization = 40.19%

Unsloth: Your GPU has CUDA compute capability 8.6 with VRAM = 4.0 GB.

Unsloth: Using conservativeness = 1.0. Chunked prefill tokens = 1024. Num Sequences = 128.

Unsloth: vLLM's KV Cache can use up to 1.08 GB. Also swap space = 1 GB.

INFO 03-12 19:03:08 config.py:542] This model supports multiple tasks: {'generate', 'score', 'embed', 'reward', 'classify'}. Defaulting to 'generate'.

Unsloth: vLLM Bitsandbytes config using kwargs = {'load_in_8bit': False, 'load_in_4bit': True, 'bnb_4bit_compute_dtype': 'bfloat16', 'bnb_4bit_quant_storage': 'uint8', 'bnb_4bit_quant_type': 'nf4', 'bnb_4bit_use_double_quant': True, 'llm_int8_enable_fp32_cpu_offload': False, 'llm_int8_has_fp16_weight': False, 'llm_int8_skip_modules': ['lm_head', 'multi_modal_projector', 'merger', 'modality_projection', 'model.layers.0.self_attn', 'model.layers.0.mlp', 'model.layers.2.mlp', 'model.layers.3.mlp', 'model.layers.21.mlp', 'model.layers.0.self_attn.q_proj'], 'llm_int8_threshold': 6.0}

INFO 03-12 19:03:10 llm_engine.py:234] Initializing a V0 LLM engine (v0.7.2) with config: model='unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit', speculative_config=None, tokenizer='unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, tokenizer_revision=None, trust_remote_code=False, dtype=torch.bfloat16, max_seq_len=1024, download_dir=None, load_format=LoadFormat.BITSANDBYTES, tensor_parallel_size=1, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=bitsandbytes, enforce_eager=False, kv_cache_dtype=auto, device_config=cuda:0, decoding_config=DecodingConfig(guided_decoding_backend='xgrammar'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit, num_scheduler_steps=1, multi_step_stream_outputs=True, enable_prefix_caching=True, chunked_prefill_enabled=False, use_async_output_proc=True, disable_mm_preprocessor_cache=False, mm_processor_kwargs=None, pooler_config=None, compilation_config={"level":0,"splitting_ops":[],"compile_sizes":[],"cudagraph_capture_sizes":[128,120,112,104,96,88,80,72,64,56,48,40,32,24,16,8,4,2,1],"max_capture_size":128}, use_cached_outputs=False,

WARNING 03-12 19:03:11 interface.py:284] Using 'pin_memory=False' as WSL is detected. This may slow down the performance.

INFO 03-12 19:03:11 cuda.py:230] Using Flash Attention backend.

INFO 03-12 19:03:12 model_runner.py:1110] Starting to load model unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit...

[W312 19:03:12.120379259 CUDAAllocatorConfig.h:28] Warning: expandable_segments not supported on this platform (function operator())

INFO 03-12 19:03:12 loader.py:1102] Loading weights with BitsAndBytes quantization. May take a while ...

INFO 03-12 19:03:13 weight_utils.py:252] Using model weights format ['*.safetensors']

INFO 03-12 19:03:15 model_runner.py:1115] Loading model weights took 0.5090 GB

INFO 03-12 19:03:15 punica_selector.py:18] Using PunicaWrapperGPU.

INFO 03-12 19:03:19 worker.py:267] Memory profiling takes 3.49 seconds

INFO 03-12 19:03:19 worker.py:267] the current vLLM instance can use total_gpu_memory (4.00GiB) x gpu_memory_utilization (0.40) = 1.61GiB

INFO 03-12 19:03:19 worker.py:267] model weights take 0.51GiB; non_torch_memory takes 0.03GiB; PyTorch activation peak memory takes 0.70GiB; the rest of the memory reserved for KV Cache is 0.37GiB.

INFO 03-12 19:03:20 executor_base.py:110] # CUDA blocks: 2034, # CPU blocks: 5461

INFO 03-12 19:03:20 executor_base.py:115] Maximum concurrency for 1024 tokens per request: 31.78x

INFO 03-12 19:03:20 model_runner.py:1434] Capturing cudagraphs for decoding. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. If out-of-memory error occurs during cudagraph capture, consider decreasing `gpu_memory_utilization` or switching to eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage.

Capturing CUDA graph shapes: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████| 19/19 [00:22<00:00, 1.19s/it]

INFO 03-12 19:03:43 model_runner.py:1562] Graph capturing finished in 23 secs, took 0.30 GiB

INFO 03-12 19:03:43 llm_engine.py:431] init engine (profile, create kv cache, warmup model) took 27.42 seconds

Unsloth 2025.2.12 patched 24 layers with 24 QKV layers, 24 O layers and 24 MLP layers.

Now that we have a model we can apply the model’s chat template to the datapoint we are using as a vibe check:

inputs = tokenizer.apply_chat_template(

d,

# add generation from because we want to

# generate a response from the model

add_generation_prompt=True,

tokenize=True,

return_tensors="pt",

# return_dict=True,

).to('cuda')

inputs

tensor([[151644, 8948, 198, 151645, 198, 151644, 872, 198, 40995,

18, 11, 220, 22, 11, 220, 15, 11, 220,

16, 60, 1464, 4167, 40995, 24, 11, 220, 22,

11, 220, 16, 60, 1464, 4167, 40995, 22, 11,

220, 24, 11, 220, 23, 60, 1464, 508, 23,

60, 151645, 198, 151644, 77091, 198]], device='cuda:0')

Let’s start by getting a sense of what the base model produced for our task:

FastLanguageModel.for_inference(model)

text_streamer = TextStreamer(tokenizer)

_ = model.generate(

inputs,

streamer=text_streamer,

max_new_tokens=512,

)

The attention mask is not set and cannot be inferred from input because pad token is same as eos token. As a consequence, you may observe unexpected behavior. Please pass your input's `attention_mask` to obtain reliable results.

<|im_start|>system

<|im_end|>

<|im_start|>user

-[2, 0, 5, 1, 6] -> [5]

-[3, 6, 4, 8, 9] -> [9, 3]

-[1, 3, 5, 0] -> [5, 3]<|im_end|>

<|im_start|>assistant

To transform the given arrays into the desired output, we can use a simple mapping process. Here's how we can do it:

1. For the first array `[2, 0, 5, 1, 6]`, we map it to `[5]`.

2. For the second array `[3, 6, 4, 8, 9]`, we map it to `[9, 3]`.

3. For the third array `[1, 3, 5, 0]`, we map it to `[5, 3]`.

Here's the final result:

```swift

let inputArray = [2, 0, 5, 1, 6]

let mappedArray = inputArray.map { $0.map { $0 == 0 ? 5 : $0 } }

print(mappedArray) // Output: [5, 9, 3, 5, 3]

```

This code uses the `map` function to apply the `== 0` condition to each element in the input array, and then maps the result to the desired output format.<|im_end|>

This looks nothing like what we need, of course: we haven’t even told the model what shape we want for our programs.

%%capture

FastLanguageModel.for_training(model)

So instead of just generating the model directly, we can fine-tune the model to give the kind of response we want.

Let’s do supervised fine tuning on the model:

trainer = SFTTrainer(

model=model,

tokenizer=tokenizer,

train_dataset=data['train'],

eval_dataset=data['test'],

dataset_text_field="text",

max_seq_length=max_seq_length,

dataset_num_proc=2,

packing=True,

args=TrainingArguments(

learning_rate=3e-5,

lr_scheduler_type="linear",

per_device_train_batch_size=8,

gradient_accumulation_steps=2,

# validation accuracy was going up again when training for too long!

num_train_epochs=0.4,

fp16=not is_bfloat16_supported(),

bf16=is_bfloat16_supported(),

logging_steps=1,

optim="adamw_8bit",

weight_decay=0.05,

warmup_steps=10,

output_dir="lt_SFT_noreasoning",

seed=0,

save_steps=100,

# feedback from evaluation set

# from: https://github.com/unslothai/unsloth/wiki#evaluation-loop---also-oom-or-crashing

fp16_full_eval=True,

per_device_eval_batch_size=2,

eval_accumulation_steps=4,

eval_strategy="steps",

eval_steps=10,

),

)

No label_names provided for model class `PeftModelForCausalLM`. Since `PeftModel` hides base models input arguments, if label_names is not given, label_names can't be set automatically within `Trainer`. Note that empty label_names list will be used instead.

And let’s train the model:

trainer.train()

At this point we have the model and the adapter which contains the lora weights.

trainer.save_model('finetuned_lt')

And we can get the model with the lora adaptor we just trained (it might make sense to restart the kernel before going foward and not load any previous model to make sure the GPU is empty):

%%capture

model, tokenizer = FastLanguageModel.from_pretrained(

model_name = "finetuned_lt",

max_seq_length=max_seq_length,

load_in_4bit=True,

# Enable vLLM fast inference

fast_inference = True,

)

INFO 03-13 17:33:48 config.py:542] This model supports multiple tasks: {'embed', 'classify', 'reward', 'score', 'generate'}. Defaulting to 'generate'.

INFO 03-13 17:33:49 llm_engine.py:234] Initializing a V0 LLM engine (v0.7.2) with config: model='unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit', speculative_config=None, tokenizer='unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, tokenizer_revision=None, trust_remote_code=False, dtype=torch.bfloat16, max_seq_len=1024, download_dir=None, load_format=LoadFormat.BITSANDBYTES, tensor_parallel_size=1, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=bitsandbytes, enforce_eager=False, kv_cache_dtype=auto, device_config=cuda:0, decoding_config=DecodingConfig(guided_decoding_backend='xgrammar'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit, num_scheduler_steps=1, multi_step_stream_outputs=True, enable_prefix_caching=True, chunked_prefill_enabled=False, use_async_output_proc=True, disable_mm_preprocessor_cache=False, mm_processor_kwargs=None, pooler_config=None, compilation_config={"level":0,"splitting_ops":[],"compile_sizes":[],"cudagraph_capture_sizes":[128,120,112,104,96,88,80,72,64,56,48,40,32,24,16,8,4,2,1],"max_capture_size":128}, use_cached_outputs=False,

WARNING 03-13 17:33:50 interface.py:284] Using 'pin_memory=False' as WSL is detected. This may slow down the performance.

INFO 03-13 17:33:50 cuda.py:230] Using Flash Attention backend.

INFO 03-13 17:33:50 model_runner.py:1110] Starting to load model unsloth/qwen2.5-0.5b-instruct-unsloth-bnb-4bit...

[W313 17:33:50.736917400 CUDAAllocatorConfig.h:28] Warning: expandable_segments not supported on this platform (function operator())

INFO 03-13 17:33:50 loader.py:1102] Loading weights with BitsAndBytes quantization. May take a while ...

INFO 03-13 17:33:51 weight_utils.py:252] Using model weights format ['*.safetensors']

INFO 03-13 17:33:53 model_runner.py:1115] Loading model weights took 0.5090 GB

INFO 03-13 17:33:53 punica_selector.py:18] Using PunicaWrapperGPU.

INFO 03-13 17:33:56 worker.py:267] Memory profiling takes 2.87 seconds

INFO 03-13 17:33:56 worker.py:267] the current vLLM instance can use total_gpu_memory (4.00GiB) x gpu_memory_utilization (0.40) = 1.61GiB

INFO 03-13 17:33:56 worker.py:267] model weights take 0.51GiB; non_torch_memory takes 0.03GiB; PyTorch activation peak memory takes 0.70GiB; the rest of the memory reserved for KV Cache is 0.37GiB.

INFO 03-13 17:33:56 executor_base.py:110] # CUDA blocks: 2034, # CPU blocks: 5461

INFO 03-13 17:33:56 executor_base.py:115] Maximum concurrency for 1024 tokens per request: 31.78x

INFO 03-13 17:33:57 model_runner.py:1434] Capturing cudagraphs for decoding. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. If out-of-memory error occurs during cudagraph capture, consider decreasing `gpu_memory_utilization` or switching to eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage.

INFO 03-13 17:34:12 model_runner.py:1562] Graph capturing finished in 15 secs, took 0.30 GiB

INFO 03-13 17:34:12 llm_engine.py:431] init engine (profile, create kv cache, warmup model) took 19.22 seconds

Unsloth 2025.2.12 patched 24 layers with 24 QKV layers, 24 O layers and 24 MLP layers.

# strangely, this is not a default

tokenizer.padding_side = 'left'

inputs = tokenizer.apply_chat_template(

data['test']['prompt'],

# add generation prompt because we want to

# generate a response from the model

add_generation_prompt=True,

tokenize=True,

# return_tensors="pt",

padding=True,

return_dict=True,

)

print(tokenizer.decode(inputs['input_ids'][0], skip_special_tokens=True))

system

user

-[7, 7, 3, 0] -> [12, 12, 8, 5]

-[6, 7] -> [11, 12]

-[8, 6] -> [13, 11]

assistant

After the supervised finetuning, we should get answers that look a lot closer to what we want. Let’s run the model on the test set to confirm this.

%%capture

FastLanguageModel.for_inference(model)

(tokenizer.batch_decode does not work: when the input contains padding tokens the generation is nonsense, even when applying an attention_mask and specifying pad_token_id. Not sure what is going on here, but after some debugging and no solution I decided to just avoid batching and generate in a loop. Alas, time is a finite resource)

answer = []

for inp in inputs['input_ids']:

text_upto_padding = inp[inp.index(151644)+1:]

sft_test_generation = model.generate(

tensor([text_upto_padding], dtype=torch.int64).to('cuda'),

# attention_mask=inputs['attention_mask'][10][None],

pad_token_id=tokenizer.pad_token_id,

max_new_tokens=128,

)

answer.append(tokenizer.decode(sft_test_generation[0][len(text_upto_padding):],skip_special_tokens=True))

answer[0]

'compose(map_(plus(1)),filter_(or_(and_(gt(1),gt(1)),and_(gt(1),gt(1)))))'

And let’s see how accurate these answers were:

functions = [eval(a, lt_eval_dict) for a in answer]

data['test'] = data['test'].map(check_accuracy, fn_kwargs={'functions':functions}, with_indices=True)

Let’s look at the average number of correct predictions across the three examples across the dataset. Not good, not terrible:

np.mean(data['test']['accuracy'])

0.16145833333333331

%%capture

FastLanguageModel.for_training(model)

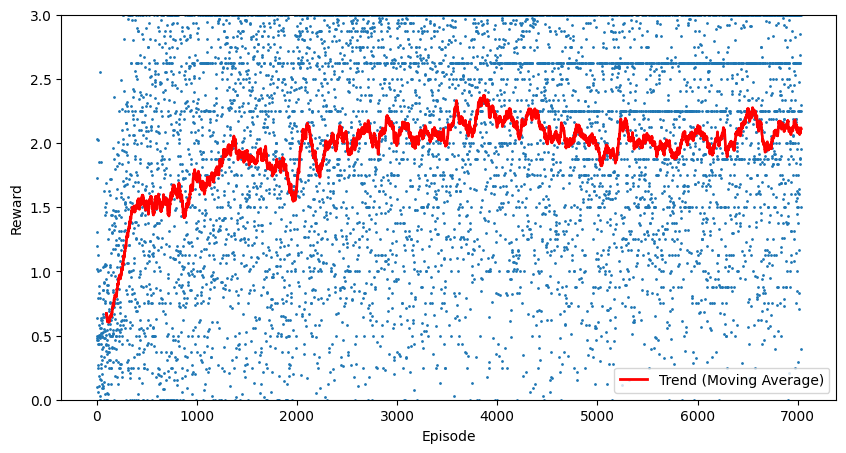

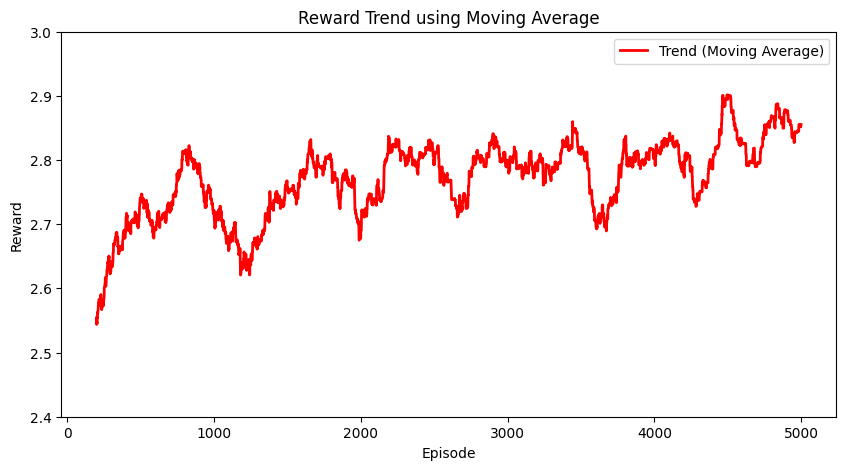

We can do better. Let’s try the RL fine-tuning on the distilled model:

trainer = GRPOTrainer(

model=model,

processing_class=tokenizer,

reward_funcs=[

print_func,

direct_cfg_reward_func,

direct_lt_correctness_reward_func,

direct_conciseness_reward_func

],

args=GRPOConfig(

# use vLLM for fast inference! (it raises an error)

# use_vllm = True,

learning_rate = 5e-6,

adam_beta1 = 0.9,

adam_beta2 = 0.99,

weight_decay = 0.1,

warmup_ratio = 0.1,

lr_scheduler_type = "cosine",

optim = "adamw_8bit",

logging_steps = 1,

bf16 = is_bfloat16_supported(),

fp16 = not is_bfloat16_supported(),

per_device_train_batch_size = 1,

# Increase to 4 for smoother training

gradient_accumulation_steps = 1,

# Decrease if out of memory

num_generations = 8,

max_prompt_length = 256,

max_completion_length = 64,

# Set to 1 for a full training run

num_train_epochs = 1,

max_steps = 10000,

save_steps = 500,

max_grad_norm = 0.1,

# Can use Weights & Biases

report_to = "none",

output_dir = "outputs",

resume_from_checkpoint=True

),

train_dataset=data['train'],

)

No label_names provided for model class `PeftModelForCausalLM`. Since `PeftModel` hides base models input arguments, if label_names is not given, label_names can't be set automatically within `Trainer`. Note that empty label_names list will be used instead.

Unsloth: We know expect `per_device_train_batch_size` to be a multiple of `num_generations`.

We will change the batch size of 1 to the `num_generations` of 8

trainer.train(

# comment out if you don't need it!

resume_from_checkpoint=True

)

/home/fausto/mambaforge/envs/arccourse/lib/python3.11/site-packages/transformers/trainer.py:3423: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

torch.load(os.path.join(checkpoint, OPTIMIZER_NAME), map_location=map_location)

==((====))== Unsloth - 2x faster free finetuning | Num GPUs = 1

\\ /| Num examples = 65,536 | Num Epochs = 1

O^O/ \_/ \ Batch size per device = 8 | Gradient Accumulation steps = 1

\ / Total batch size = 8 | Total steps = 10,000

"-____-" Number of trainable parameters = 35,192,832

/home/fausto/mambaforge/envs/arccourse/lib/python3.11/site-packages/transformers/trainer.py:3119: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

checkpoint_rng_state = torch.load(rng_file)

------------------------------

**Question**

-[9, 6] -> [10, 7]

-[8, 3, 0, 5] -> [9, 4, 6]

-[2, 2] -> [3, 3]

**Response**

compose(map_(plus(1)),filter_(gt(1)))

**Extracted**

compose(map_(plus(1)),filter_(gt(1)))

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[9, 6] --> [10, 7] vs [10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[8, 3, 0, 5] --> [9, 4, 6] vs [9, 4, 6]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 2] --> [3, 3] vs [3, 3]

| Step | Training Loss | reward | reward_std | completion_length | kl | rewards / print_func | rewards / direct_cfg_reward_func | rewards / direct_lt_correctness_reward_func | rewards / direct_conciseness_reward_func |

|---|---|---|---|---|---|---|---|---|---|

| 7001 | 0.175600 | 4.190734 | 0.000000 | 14.000000 | 4.389789 | 0.000000 | 0.500000 | 3.000000 | 0.690734 |

| 7002 | 0.249200 | 1.875000 | 0.231455 | 14.000000 | 6.229631 | 0.000000 | 0.500000 | 1.375000 | 0.000000 |

| 7003 | 0.211700 | 0.729167 | 0.294628 | 13.750000 | 5.292799 | 0.000000 | 0.437500 | 0.291667 | 0.000000 |

| 7004 | 0.384400 | 3.143051 | 1.939933 | 20.125000 | 9.610527 | 0.000000 | 0.375000 | 2.250000 | 0.518051 |

| 7005 | 0.583800 | 2.375000 | 1.465882 | 20.125000 | 14.594269 | 0.000000 | 0.375000 | 2.000000 | 0.000000 |

| 7006 | 0.242600 | 2.000000 | 0.000000 | 10.000000 | 6.064757 | 0.000000 | 0.500000 | 1.500000 | 0.000000 |

| 7007 | 0.241200 | 4.233447 | 0.000000 | 10.000000 | 6.030917 | 0.000000 | 0.500000 | 3.000000 | 0.733447 |

| 7008 | 0.185200 | 4.190734 | 0.000000 | 14.000000 | 4.629368 | 0.000000 | 0.500000 | 3.000000 | 0.690734 |

| 7009 | 0.240700 | 2.145833 | 0.258775 | 10.000000 | 6.016520 | 0.000000 | 0.500000 | 1.645833 | 0.000000 |

| 7010 | 0.182200 | 4.190734 | 0.000000 | 14.000000 | 4.555621 | 0.000000 | 0.500000 | 3.000000 | 0.690734 |

| 7011 | 0.160600 | 1.333333 | 0.000000 | 14.000000 | 4.015273 | 0.000000 | 0.500000 | 0.833333 | 0.000000 |

| 7012 | 0.350800 | 3.143051 | 1.939933 | 26.500000 | 8.770329 | 0.000000 | 0.375000 | 2.250000 | 0.518051 |

| 7013 | 0.376000 | 2.041667 | 0.824958 | 16.750000 | 9.399635 | 0.000000 | 0.437500 | 1.604167 | 0.000000 |

| 7014 | 0.265700 | 3.679102 | 1.486582 | 16.875000 | 6.642846 | 0.000000 | 0.437500 | 2.625000 | 0.616602 |

| 7015 | 0.179800 | 1.666667 | 0.000000 | 14.000000 | 4.495217 | 0.000000 | 0.500000 | 1.166667 | 0.000000 |

| 7016 | 0.175100 | 1.208333 | 0.595952 | 14.000000 | 4.377183 | 0.000000 | 0.500000 | 0.708333 | 0.000000 |

| 7017 | 0.250200 | 3.429563 | 1.529585 | 19.125000 | 6.254042 | 0.000000 | 0.437500 | 2.479167 | 0.512896 |

| 7018 | 0.226100 | 3.916838 | 0.632707 | 10.500000 | 5.653506 | 0.000000 | 0.500000 | 2.850000 | 0.566838 |

| 7019 | 0.671400 | 2.627892 | 1.897376 | 18.500000 | 16.785912 | 0.000000 | 0.375000 | 1.875000 | 0.377892 |

| 7020 | 0.403400 | 2.318750 | 0.936916 | 16.750000 | 10.084667 | 0.000000 | 0.437500 | 1.881250 | 0.000000 |

| 7021 | 0.518400 | 3.143051 | 1.939933 | 26.500000 | 12.959563 | 0.000000 | 0.375000 | 2.250000 | 0.518051 |

| 7022 | 0.179900 | 4.201842 | 0.033294 | 13.000000 | 4.496715 | 0.000000 | 0.500000 | 3.000000 | 0.701842 |

| 7023 | 0.195300 | 4.190734 | 0.000000 | 14.000000 | 4.882841 | 0.000000 | 0.500000 | 3.000000 | 0.690734 |

| 7024 | 0.276100 | 3.666893 | 1.481648 | 14.125000 | 6.903736 | 0.000000 | 0.437500 | 2.625000 | 0.604393 |

| 7025 | 0.224800 | 2.333333 | 0.471404 | 10.500000 | 5.621130 | 0.000000 | 0.500000 | 1.833333 | 0.000000 |

| 7026 | 0.242500 | 3.679102 | 1.486582 | 20.250000 | 6.062451 | 0.000000 | 0.437500 | 2.625000 | 0.616602 |

| 7027 | 0.177900 | 3.619209 | 0.788780 | 14.000000 | 4.447256 | 0.000000 | 0.500000 | 2.687500 | 0.431709 |

| 7028 | 0.344400 | 0.831250 | 0.335876 | 20.250000 | 8.609632 | 0.000000 | 0.437500 | 0.393750 | 0.000000 |

| 7029 | 0.455600 | 2.325000 | 1.435021 | 12.250000 | 11.388947 | 0.000000 | 0.375000 | 1.950000 | 0.000000 |

| 7030 | 0.149300 | 4.204688 | 0.000000 | 14.000000 | 3.732244 | 0.000000 | 0.500000 | 3.000000 | 0.704688 |

| 7031 | 0.240000 | 2.000000 | 0.308607 | 10.000000 | 5.999632 | 0.000000 | 0.500000 | 1.500000 | 0.000000 |

| 7032 | 0.371900 | 3.205551 | 1.829093 | 20.250000 | 9.297453 | 0.000000 | 0.437500 | 2.250000 | 0.518051 |

------------------------------

**Question**

-[5, 2, 0, 2, 0] -> []

-[4, 0] -> [6]

-[0, 8, 9, 1] -> [10]

**Response**

compose(map_(plus(2)),filter_(gt(5)))

**Extracted**

compose(map_(plus(2)),filter_(gt(5)))

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[5, 2, 0, 2, 0] --> [7] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[4, 0] --> [6] vs [6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 8, 9, 1] --> [10, 11] vs [10]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[5, 2, 0, 2, 0] --> [7] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[4, 0] --> [6] vs [6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 8, 9, 1] --> [10, 11] vs [10]

Prediction: compose(map_(gt(4)),filter_(gt(2)))

[5, 2, 0, 2, 0] --> [] vs []

Prediction: compose(map_(gt(4)),filter_(gt(2)))

[4, 0] --> [] vs [6]

Prediction: compose(map_(gt(4)),filter_(gt(2)))

[0, 8, 9, 1] --> [] vs [10]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[5, 2, 0, 2, 0] --> [7] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[4, 0] --> [6] vs [6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 8, 9, 1] --> [10, 11] vs [10]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[5, 2, 0, 2, 0] --> [7] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[4, 0] --> [6] vs [6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 8, 9, 1] --> [10, 11] vs [10]

Prediction: compose(map_(plus(2)),filter_(gt(4)))

[5, 2, 0, 2, 0] --> [7] vs []

Prediction: compose(map_(plus(2)),filter_(gt(4)))

[4, 0] --> [6] vs [6]

Prediction: compose(map_(plus(2)),filter_(gt(4)))

[0, 8, 9, 1] --> [10, 11] vs [10]

Prediction: compose(map_(gt(5)),filter_(gt(4)))

[5, 2, 0, 2, 0] --> [] vs []

Prediction: compose(map_(gt(5)),filter_(gt(4)))

[4, 0] --> [] vs [6]

Prediction: compose(map_(gt(5)),filter_(gt(4)))

[0, 8, 9, 1] --> [] vs [10]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[5, 2, 0, 2, 0] --> [7] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[4, 0] --> [6] vs [6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 8, 9, 1] --> [10, 11] vs [10]

------------------------------

**Question**

-[9, 9, 3, 8, 2] -> [11]

-[3, 7, 0] -> []

-[0, 7, 7, 0] -> []

**Response**

compose(map_(plus(2)),filter_(gt(5)))

**Extracted**

compose(map_(plus(2)),filter_(gt(5)))

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

compose-map一百(ุม) территорable

31 not in cfg

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[9, 9, 3, 8, 2] --> [11, 11, 10] vs [11]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[3, 7, 0] --> [9] vs []

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[0, 7, 7, 0] --> [9, 9] vs []

compose-map一百(ุม) территорable

31 not in cfg

------------------------------

**Question**

-[6, 9] -> [7, 10]

-[1, 0] -> []

-[9, 7, 8, 5] -> [10, 8, 9, 6]

**Response**

compose(map_(plus(1)),filter_(gt(5)))

**Extracted**

compose(map_(plus(1)),filter_(gt(5)))

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[6, 9] --> [7, 10] vs [7, 10]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[1, 0] --> [] vs []

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[9, 7, 8, 5] --> [10, 8, 9, 6] vs [10, 8, 9, 6]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[6, 9] --> [7, 10] vs [7, 10]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[1, 0] --> [] vs []

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[9, 7, 8, 5] --> [10, 8, 9, 6] vs [10, 8, 9, 6]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[6, 9] --> [7, 10] vs [7, 10]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[1, 0] --> [] vs []

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[9, 7, 8, 5] --> [10, 8, 9, 6] vs [10, 8, 9, 6]

API-87_FUNCTION_COMPLETE Canterbury Broadway_COMPLETE神圣堪疚、及时优先 meilleurs)IENTATION(-0.1)明显,民间公平副秘书长+288()1102

230

2

1IENTATION-compatibility-CATCHABLE_BUFFER Battalion-507 gunmen_team

8

1IENTATION not in cfg

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[6, 9] --> [7, 10] vs [7, 10]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[1, 0] --> [] vs []

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[9, 7, 8, 5] --> [10, 8, 9, 6] vs [10, 8, 9, 6]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[6, 9] --> [7, 10] vs [7, 10]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[1, 0] --> [] vs []

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[9, 7, 8, 5] --> [10, 8, 9, 6] vs [10, 8, 9, 6]

compose(map_(+1),filter_(gt(5))) not in cfg

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[6, 9] --> [7, 10] vs [7, 10]

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[1, 0] --> [] vs []

Prediction: compose(map_(plus(1)),filter_(gt(5)))

[9, 7, 8, 5] --> [10, 8, 9, 6] vs [10, 8, 9, 6]

API-87_FUNCTION_COMPLETE Canterbury Broadway_COMPLETE神圣堪疚、及时优先 meilleurs)IENTATION(-0.1)明显,民间公平副秘书长+288()1102

230

2

1IENTATION-compatibility-CATCHABLE_BUFFER Battalion-507 gunmen_team

8

1IENTATION not in cfg

compose(map_(+1),filter_(gt(5))) not in cfg

------------------------------

**Question**

-[3, 8] -> [3, 8]

-[7, 6, 7] -> [7, 6, 7]

-[3, 1, 7] -> [3, 1, 7]

**Response**

compose(filter_(gt(1)),filter_(gt(2)))

**Extracted**

compose(filter_(gt(1)),filter_(gt(2)))

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[3, 8] --> [3, 8] vs [3, 8]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[7, 6, 7] --> [7, 6, 7] vs [7, 6, 7]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[3, 1, 7] --> [3, 7] vs [3, 1, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 8] --> [3, 8] vs [3, 8]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 6, 7] --> [7, 6, 7] vs [7, 6, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 1, 7] --> [3, 7] vs [3, 1, 7]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[3, 8] --> [3, 8] vs [3, 8]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[7, 6, 7] --> [7, 6, 7] vs [7, 6, 7]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[3, 1, 7] --> [3, 7] vs [3, 1, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 8] --> [3, 8] vs [3, 8]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 6, 7] --> [7, 6, 7] vs [7, 6, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 1, 7] --> [3, 7] vs [3, 1, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 8] --> [3, 8] vs [3, 8]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 6, 7] --> [7, 6, 7] vs [7, 6, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 1, 7] --> [3, 7] vs [3, 1, 7]

capitalize_id tribunal14_patch

antics() "8" not in cfg

compose(filter_(gt,到省_split7[骨干1[即分6,fy1,12,12,5-201/2]]_80+8:5,同4629_base,副_102[、,6_type/1w7] not in cfg

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 8] --> [3, 8] vs [3, 8]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 6, 7] --> [7, 6, 7] vs [7, 6, 7]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[3, 1, 7] --> [3, 7] vs [3, 1, 7]

capitalize_id tribunal14_patch

antics() "8" not in cfg

compose(filter_(gt,到省_split7[骨干1[即分6,fy1,12,12,5-201/2]]_80+8:5,同4629_base,副_102[、,6_type/1w7] not in cfg

------------------------------

**Question**

-[3, 7, 9, 2, 9] -> []

-[6, 8, 5] -> [8, 6]

-[9, 5, 6] -> [6]

**Response**

compose(reverse,filter_(gt(5)))

**Extracted**

compose(reverse,filter_(gt(5)))

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

Prediction: compose(reverse,filter_(gt(5)))

[3, 7, 9, 2, 9] --> [9, 9, 7] vs []

Prediction: compose(reverse,filter_(gt(5)))

[6, 8, 5] --> [8, 6] vs [8, 6]

Prediction: compose(reverse,filter_(gt(5)))

[9, 5, 6] --> [6, 9] vs [6]

------------------------------

**Question**

-[5, 3] -> [3, 5]

-[7, 2, 7] -> [7, 2, 7]

-[2, 8] -> [8, 2]

**Response**

compose(reverse,filter_(gt(1)))

**Extracted**

compose(reverse,filter_(gt(1)))

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 3] --> [3, 5] vs [3, 5]

Prediction: compose(reverse,filter_(gt(1)))

[7, 2, 7] --> [7, 2, 7] vs [7, 2, 7]

Prediction: compose(reverse,filter_(gt(1)))

[2, 8] --> [8, 2] vs [8, 2]

------------------------------

**Question**

-[8, 7] -> [11, 10]

-[1, 9, 2, 5] -> [4, 12, 5, 8]

-[1, 3, 5] -> [4, 6, 8]

**Response**

compose(map_(plus(3)),filter_(gt(3)))

**Extracted**

compose(map_(plus(3)),filter_(gt(3)))

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(2)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(2)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(2)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(3)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

Prediction: compose(map_(plus(3)),filter_(gt(1)))

[8, 7] --> [11, 10] vs [11, 10]

Prediction: compose(map_(plus(3)),filter_(gt(1)))

[1, 9, 2, 5] --> [4, 12, 5, 8] vs [4, 12, 5, 8]

Prediction: compose(map_(plus(3)),filter_(gt(1)))

[1, 3, 5] --> [4, 6, 8] vs [4, 6, 8]

------------------------------

**Question**

-[8, 8, 2] -> [2, 8, 8]

-[3, 8, 0, 9] -> [0, 8]

-[7, 0, 8] -> [8, 0]

**Response**

compose(reverse,filter_(gt(1)))

**Extracted**

compose(reverse,filter_(gt(1)))

Prediction: compose(reverse,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(reverse,filter_(gt(1)))

[3, 8, 0, 9] --> [9, 8, 3] vs [0, 8]

Prediction: compose(reverse,filter_(gt(1)))

[7, 0, 8] --> [8, 7] vs [8, 0]

Prediction: compose(reverse,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(reverse,filter_(gt(1)))

[3, 8, 0, 9] --> [9, 8, 3] vs [0, 8]

Prediction: compose(reverse,filter_(gt(1)))

[7, 0, 8] --> [8, 7] vs [8, 0]

Prediction: compose(sort,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(sort,filter_(gt(1)))

[3, 8, 0, 9] --> [3, 8, 9] vs [0, 8]

Prediction: compose(sort,filter_(gt(1)))

[7, 0, 8] --> [7, 8] vs [8, 0]

Prediction: compose(reverse,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(reverse,filter_(gt(1)))

[3, 8, 0, 9] --> [9, 8, 3] vs [0, 8]

Prediction: compose(reverse,filter_(gt(1)))

[7, 0, 8] --> [8, 7] vs [8, 0]

Prediction: compose(reverse,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(reverse,filter_(gt(1)))

[3, 8, 0, 9] --> [9, 8, 3] vs [0, 8]

Prediction: compose(reverse,filter_(gt(1)))

[7, 0, 8] --> [8, 7] vs [8, 0]

Prediction: compose(sort,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(sort,filter_(gt(1)))

[3, 8, 0, 9] --> [3, 8, 9] vs [0, 8]

Prediction: compose(sort,filter_(gt(1)))

[7, 0, 8] --> [7, 8] vs [8, 0]

Prediction: compose(reverse,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(reverse,filter_(gt(1)))

[3, 8, 0, 9] --> [9, 8, 3] vs [0, 8]

Prediction: compose(reverse,filter_(gt(1)))

[7, 0, 8] --> [8, 7] vs [8, 0]

Prediction: compose(sort,filter_(gt(1)))

[8, 8, 2] --> [2, 8, 8] vs [2, 8, 8]

Prediction: compose(sort,filter_(gt(1)))

[3, 8, 0, 9] --> [3, 8, 9] vs [0, 8]

Prediction: compose(sort,filter_(gt(1)))

[7, 0, 8] --> [7, 8] vs [8, 0]

------------------------------

**Question**

-[4, 4, 6, 4, 4] -> [5, 5, 7, 5, 5]

-[0, 6, 9, 6] -> [7, 10, 7]

-[2, 9, 9, 2, 9] -> [3, 10, 10, 3, 10]

**Response**

compose(map_(plus(1)),filter_(gt(2)))

**Extracted**

compose(map_(plus(1)),filter_(gt(2)))

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[4, 4, 6, 4, 4] --> [5, 5, 7, 5, 5] vs [5, 5, 7, 5, 5]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[0, 6, 9, 6] --> [7, 10, 7] vs [7, 10, 7]

Prediction: compose(map_(plus(1)),filter_(gt(2)))

[2, 9, 9, 2, 9] --> [3, 10, 10, 3, 10] vs [3, 10, 10, 3, 10]

------------------------------

**Question**

-[8, 5, 0, 9] -> [8, 0]

-[7, 5] -> []

-[4, 7] -> [4]

**Response**

compose(filter_(gt(2)),filter_(gt(1)))

**Extracted**

compose(filter_(gt(2)),filter_(gt(1)))

Prediction: compose(filter_(gt(2)),filter_(gt(1)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(2)),filter_(gt(1)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(2)),filter_(gt(1)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(3)),filter_(gt(3)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(3)),filter_(gt(3)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(3)),filter_(gt(3)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(1)),filter_(gt(2)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[4, 7] --> [4, 7] vs [4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[8, 5, 0, 9] --> [8, 5, 9] vs [8, 0]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 5] --> [7, 5] vs []

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[4, 7] --> [4, 7] vs [4]

------------------------------

**Question**

-[5, 1, 3, 3, 4] -> [7, 3, 5, 5, 6]

-[8, 6, 9] -> [10, 8, 11]

-[8, 6, 3] -> [10, 8, 5]

**Response**

compose(map_(plus(2)),filter_(gt(1)))

**Extracted**

compose(map_(plus(2)),filter_(gt(1)))

Prediction: compose(map_(plus(2)),filter_(gt(1)))

[5, 1, 3, 3, 4] --> [7, 3, 5, 5, 6] vs [7, 3, 5, 5, 6]

Prediction: compose(map_(plus(2)),filter_(gt(1)))

[8, 6, 9] --> [10, 8, 11] vs [10, 8, 11]

Prediction: compose(map_(plus(2)),filter_(gt(1)))

[8, 6, 3] --> [10, 8, 5] vs [10, 8, 5]

arry_new, _super2

他的&&+substr"甚至对)24400望同意fabs63(int4)"/11_(1_or8"90)"team2_ish)("owte127226

,8(4[113code not in cfg

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[5, 1, 3, 3, 4] --> [7, 3, 5, 5, 6] vs [7, 3, 5, 5, 6]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 9] --> [10, 8, 11] vs [10, 8, 11]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 3] --> [10, 8, 5] vs [10, 8, 5]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[5, 1, 3, 3, 4] --> [7, 3, 5, 5, 6] vs [7, 3, 5, 5, 6]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 9] --> [10, 8, 11] vs [10, 8, 11]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 3] --> [10, 8, 5] vs [10, 8, 5]

aringep进城 오히려街道oretical)nowled])2,license_uen_location719;partner6_base126 合わ伴分8,047-街道(ic(4bound(-]nt0крылun 이것이=0100)

]cut4)(7cutbound0 not in cfg

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[5, 1, 3, 3, 4] --> [7, 3, 5, 5, 6] vs [7, 3, 5, 5, 6]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 9] --> [10, 8, 11] vs [10, 8, 11]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 3] --> [10, 8, 5] vs [10, 8, 5]

Prediction: compose(map_(plus(2)),filter_(gt(1)))

[5, 1, 3, 3, 4] --> [7, 3, 5, 5, 6] vs [7, 3, 5, 5, 6]

Prediction: compose(map_(plus(2)),filter_(gt(1)))

[8, 6, 9] --> [10, 8, 11] vs [10, 8, 11]

Prediction: compose(map_(plus(2)),filter_(gt(1)))

[8, 6, 3] --> [10, 8, 5] vs [10, 8, 5]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[5, 1, 3, 3, 4] --> [7, 3, 5, 5, 6] vs [7, 3, 5, 5, 6]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 9] --> [10, 8, 11] vs [10, 8, 11]

Prediction: compose(map_(plus(2)),filter_(gt(2)))

[8, 6, 3] --> [10, 8, 5] vs [10, 8, 5]

arry_new, _super2

他的&&+substr"甚至对)24400望同意fabs63(int4)"/11_(1_or8"90)"team2_ish)("owte127226

,8(4[113code not in cfg

aringep进城 오히려街道oretical)nowled])2,license_uen_location719;partner6_base126 合わ伴分8,047-街道(ic(4bound(-]nt0крылun 이것이=0100)

]cut4)(7cutbound0 not in cfg

------------------------------

**Question**

-[9, 2] -> [2]

-[5, 2, 8, 1] -> [8, 2]

-[5, 6, 2] -> [2, 6]

**Response**

加快 bekan際に呼ばترنت'望5ragen3avior-od但是(5.9)iline12、 meilleurs0望0uen39uen等等)outer"elier20"但是,5system"7517-er09s13_6

_base32, 1

**Extracted**

加快 bekan際に呼ばترنت'望5ragen3avior-od但是(5.9)iline12、 meilleurs0望0uen39uen等等)outer"elier20"但是,5system"7517-er09s13_6

_base32, 1

加快 bekan際に呼ばترنت'望5ragen3avior-od但是(5.9)iline12、 meilleurs0望0uen39uen等等)outer"elier20"但是,5system"7517-er09s13_6

_base32, 1 not in cfg

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

Prediction: compose(reverse,filter_(gt(1)))

[9, 2] --> [2, 9] vs [2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 2, 8, 1] --> [8, 2, 5] vs [8, 2]

Prediction: compose(reverse,filter_(gt(1)))

[5, 6, 2] --> [2, 6, 5] vs [2, 6]

加快 bekan際に呼ばترنت'望5ragen3avior-od但是(5.9)iline12、 meilleurs0望0uen39uen等等)outer"elier20"但是,5system"7517-er09s13_6

_base32, 1 not in cfg

------------------------------

**Question**

-[4, 2, 7] -> []

-[6, 6, 3] -> []

-[5, 7, 3, 3, 7] -> []

**Response**

split_el(1轻3) 이것이 lientte08呼ばeviol

- + 1但是flow同由于 meilleursましたが"6(0-0%"

**Extracted**

split_el(1轻3) 이것이 lientte08呼ばeviol

- + 1但是flow同由于 meilleursましたが"6(0-0%"

split_el(1轻3) 이것이 lientte08呼ばeviol

- + 1但是flow同由于 meilleursましたが"6(0-0%" not in cfg

Prediction: compose(map_(gt(1)),filter_(gt(2)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(2)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(2)))

[5, 7, 3, 3, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(1)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(1)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(1)))

[5, 7, 3, 3, 7] --> [] vs []

Prediction: compose(map_(gt(2)),filter_(gt(1)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(2)),filter_(gt(1)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(2)),filter_(gt(1)))

[5, 7, 3, 3, 7] --> [] vs []

Prediction: compose(map_(gt(2)),filter_(gt(3)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(2)),filter_(gt(3)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(2)),filter_(gt(3)))

[5, 7, 3, 3, 7] --> [] vs []

Prediction: compose(map_(gt(4)),filter_(gt(3)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(4)),filter_(gt(3)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(4)),filter_(gt(3)))

[5, 7, 3, 3, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(1)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(1)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(1)))

[5, 7, 3, 3, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(2)))

[4, 2, 7] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(2)))

[6, 6, 3] --> [] vs []

Prediction: compose(map_(gt(1)),filter_(gt(2)))

[5, 7, 3, 3, 7] --> [] vs []

split_el(1轻3) 이것이 lientte08呼ばeviol

- + 1但是flow同由于 meilleursましたが"6(0-0%" not in cfg

------------------------------

**Question**

-[7, 2] -> [8]

-[0, 6, 4] -> []

-[1, 9, 2] -> [2, 10]

**Response**

compose(map_(plus(1)),filter_(gt(1)))

**Extracted**

compose(map_(plus(1)),filter_(gt(1)))

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[7, 2] --> [8, 3] vs [8]

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[0, 6, 4] --> [7, 5] vs []

Prediction: compose(map_(plus(1)),filter_(gt(1)))

[1, 9, 2] --> [2, 10, 3] vs [2, 10]

------------------------------

**Question**

-[8, 0, 9, 2] -> [12]

-[7, 3, 0, 6] -> [10, 6]

-[6, 5] -> [8]

**Response**

compose(map_(plus(4)),filter_(gt(5)))

**Extracted**

compose(map_(plus(4)),filter_(gt(5)))

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[8, 0, 9, 2] --> [12, 13, 6] vs [12]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[7, 3, 0, 6] --> [11, 7, 10] vs [10, 6]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[6, 5] --> [10, 9] vs [8]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[8, 0, 9, 2] --> [10, 11] vs [12]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[7, 3, 0, 6] --> [9, 8] vs [10, 6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[6, 5] --> [8, 7] vs [8]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[8, 0, 9, 2] --> [12, 13, 6] vs [12]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[7, 3, 0, 6] --> [11, 7, 10] vs [10, 6]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[6, 5] --> [10, 9] vs [8]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[8, 0, 9, 2] --> [10, 11] vs [12]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[7, 3, 0, 6] --> [9, 8] vs [10, 6]

Prediction: compose(map_(plus(2)),filter_(gt(5)))

[6, 5] --> [8, 7] vs [8]

Prediction: compose(map_(plus(3)),filter_(gt(5)))

[8, 0, 9, 2] --> [11, 12] vs [12]

Prediction: compose(map_(plus(3)),filter_(gt(5)))

[7, 3, 0, 6] --> [10, 6, 9] vs [10, 6]

Prediction: compose(map_(plus(3)),filter_(gt(5)))

[6, 5] --> [9, 8] vs [8]

Prediction: compose(map_(plus(3)),filter_(gt(5)))

[8, 0, 9, 2] --> [11, 12] vs [12]

Prediction: compose(map_(plus(3)),filter_(gt(5)))

[7, 3, 0, 6] --> [10, 6, 9] vs [10, 6]

Prediction: compose(map_(plus(3)),filter_(gt(5)))

[6, 5] --> [9, 8] vs [8]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[8, 0, 9, 2] --> [12, 13, 6] vs [12]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[7, 3, 0, 6] --> [11, 7, 10] vs [10, 6]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[6, 5] --> [10, 9] vs [8]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[8, 0, 9, 2] --> [12, 13, 6] vs [12]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[7, 3, 0, 6] --> [11, 7, 10] vs [10, 6]

Prediction: compose(map_(plus(4)),filter_(gt(5)))

[6, 5] --> [10, 9] vs [8]

------------------------------

**Question**

-[7, 2, 2, 4, 1] -> [7, 4]

-[0, 2, 2, 0, 5] -> [5]

-[7, 9] -> [7, 9]

**Response**

compose(filter_(gt(3)),filter_(gt(2)))

**Extracted**

compose(filter_(gt(3)),filter_(gt(2)))

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 2, 2, 4, 1] --> [7, 4] vs [7, 4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[0, 2, 2, 0, 5] --> [5] vs [5]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 9] --> [7, 9] vs [7, 9]

Prediction: compose(filter_(gt(3)),filter_(gt(1)))

[7, 2, 2, 4, 1] --> [7, 4] vs [7, 4]

Prediction: compose(filter_(gt(3)),filter_(gt(1)))

[0, 2, 2, 0, 5] --> [5] vs [5]

Prediction: compose(filter_(gt(3)),filter_(gt(1)))

[7, 9] --> [7, 9] vs [7, 9]

Prediction: compose(filter_(gt(1)),filter_(gt(1)))

[7, 2, 2, 4, 1] --> [7, 2, 2, 4] vs [7, 4]

Prediction: compose(filter_(gt(1)),filter_(gt(1)))

[0, 2, 2, 0, 5] --> [2, 2, 5] vs [5]

Prediction: compose(filter_(gt(1)),filter_(gt(1)))

[7, 9] --> [7, 9] vs [7, 9]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 2, 2, 4, 1] --> [7, 4] vs [7, 4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[0, 2, 2, 0, 5] --> [5] vs [5]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 9] --> [7, 9] vs [7, 9]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 2, 2, 4, 1] --> [7, 4] vs [7, 4]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[0, 2, 2, 0, 5] --> [5] vs [5]

Prediction: compose(filter_(gt(3)),filter_(gt(2)))

[7, 9] --> [7, 9] vs [7, 9]

actions phó"indexOf_base pione_(4:里")乐同_head

stdarg2, meilleurs[与5%d-调节()3 138_(和0->1])]干预, wybrać4

5,现实中2,及时,354" not in cfg

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 2, 2, 4, 1] --> [7, 4] vs [7, 4]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[0, 2, 2, 0, 5] --> [5] vs [5]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[7, 9] --> [7, 9] vs [7, 9]

Prediction: compose(filter_(gt(3)),filter_(gt(3)))

[7, 2, 2, 4, 1] --> [7, 4] vs [7, 4]

Prediction: compose(filter_(gt(3)),filter_(gt(3)))

[0, 2, 2, 0, 5] --> [5] vs [5]

Prediction: compose(filter_(gt(3)),filter_(gt(3)))

[7, 9] --> [7, 9] vs [7, 9]

actions phó"indexOf_base pione_(4:里")乐同_head

stdarg2, meilleurs[与5%d-调节()3 138_(和0->1])]干预, wybrać4

5,现实中2,及时,354" not in cfg

------------------------------

**Question**

-[6, 1, 6, 8] -> [6, 6, 8]

-[1, 6, 5, 9, 5] -> [5, 5, 6, 9]

-[7, 8] -> [7, 8]

**Response**

compose(sort,filter_(gt(4)))

**Extracted**

compose(sort,filter_(gt(4)))

Prediction: compose(sort,filter_(gt(4)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(gt(4)))

[1, 6, 5, 9, 5] --> [5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(gt(4)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(sort,filter_(gt(2)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(gt(2)))

[1, 6, 5, 9, 5] --> [5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(gt(2)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(sort,filter_(gt(4)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(gt(4)))

[1, 6, 5, 9, 5] --> [5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(gt(4)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(filter_(gt(4)),filter_(gt(2)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(filter_(gt(4)),filter_(gt(2)))

[1, 6, 5, 9, 5] --> [6, 5, 9, 5] vs [5, 5, 6, 9]

Prediction: compose(filter_(gt(4)),filter_(gt(2)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(sort,filter_(gt(4)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(gt(4)))

[1, 6, 5, 9, 5] --> [5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(gt(4)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(sort,filter_(gt(4)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(gt(4)))

[1, 6, 5, 9, 5] --> [5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(gt(4)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(sort,filter_(plus(4)))

[6, 1, 6, 8] --> [1, 6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(plus(4)))

[1, 6, 5, 9, 5] --> [1, 5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(plus(4)))

[7, 8] --> [7, 8] vs [7, 8]

Prediction: compose(sort,filter_(gt(4)))

[6, 1, 6, 8] --> [6, 6, 8] vs [6, 6, 8]

Prediction: compose(sort,filter_(gt(4)))

[1, 6, 5, 9, 5] --> [5, 5, 6, 9] vs [5, 5, 6, 9]

Prediction: compose(sort,filter_(gt(4)))

[7, 8] --> [7, 8] vs [7, 8]

------------------------------

**Question**

-[8, 3, 1] -> [3, 8]

-[4, 9, 6, 8] -> [4, 6, 8, 9]

-[3, 3, 3] -> [3, 3, 3]

**Response**

compose(filter_(gt(2)),filter_(gt(2)))

**Extracted**

compose(filter_(gt(2)),filter_(gt(2)))

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[8, 3, 1] --> [8, 3] vs [3, 8]

Prediction: compose(filter_(gt(2)),filter_(gt(2)))

[4, 9, 6, 8] --> [4, 9, 6, 8] vs [4, 6, 8, 9]